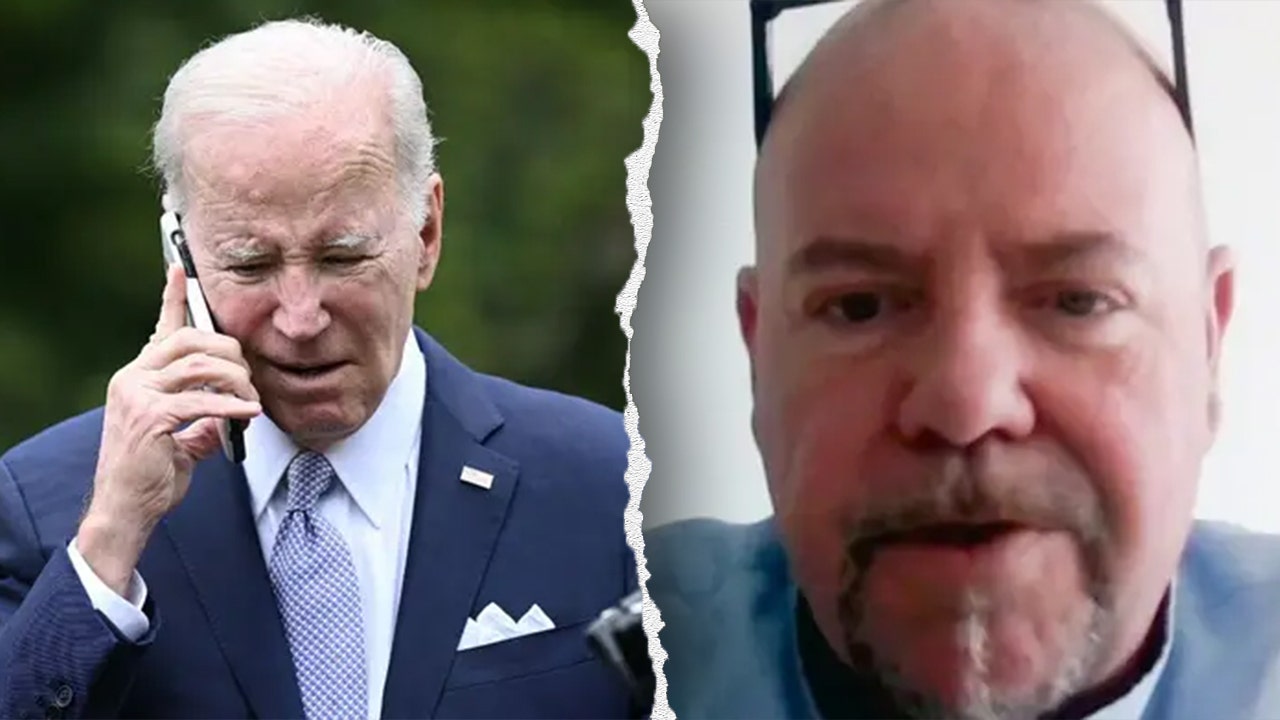

Trial begins for political consultant accused of sending AI-generated robocalls mimicking Biden

The trial of Democratic political consultant Steve Kramer has begun in a significant and unprecedented case involving the use of artificial intelligence (AI) in political campaigning. Kramer has admitted to orchestrating a campaign of AI-generated robocalls that mimicked President Joe Biden's voice. The calls, sent to thousands of New Hampshire voters ahead of the state's 2024 primary, were designed to discourage people from voting. The case has drawn widespread attention because of its use of deepfake technology, raising critical questions about the ethical and legal implications of AI in politics. Kramer now faces more than two dozen criminal charges, as well as a potential $6 million fine proposed by the Federal Communications Commission (FCC), the first such penalty involving AI technology.

The calls went out just two days before the January 23 New Hampshire primary, a contest that was already contentious because of its timing. New Hampshire had scheduled its primary out of compliance with the Democratic National Committee's (DNC) 2024 presidential nominating calendar, so President Biden did not appear on the state's ballot. Granite State Democrats responded with a write-in campaign for Biden, aiming to spare the president an electoral embarrassment as he sought a second term. It was in this context that Kramer hired a magician, Paul Carpenter, to create a deepfake recording of Biden's voice. The fake message, delivered by robocall, urged voters to skip the primary, claiming that their votes would matter more in the November general election than in the unsanctioned primary.

The calls appeared to come from Kathy Sullivan, a former chair of the New Hampshire Democratic Party who was leading the pro-Biden write-in campaign at the time. A recording obtained by NBC News captured a voice mimicking Biden saying, "What a bunch of malarkey. You know the value of voting Democratic when our votes count. It's important that you save your vote for the November election… Voting this Tuesday only enables Republicans in their quest to elect Donald Trump again. Your vote makes a difference in November, not this Tuesday." Authorities estimate that the calls reached between 5,000 and 25,000 people, raising concerns about voter suppression and the potential for widespread misinformation in future elections. Kramer has since admitted to orchestrating the stunt, which he says was intended to highlight the need for stricter regulation of AI. "Maybe I'm a villain today, but I think, in the end, we get a better country and better democracy because of what I've done, deliberately," he said in an earlier interview.

The legal fallout from Kramer's actions has been swift and severe. The FCC has proposed a $6 million fine against him, and Lingo Telecom, the company accused of transmitting the calls, faces a proposed $2 million fine. Either party could still settle or negotiate its penalty, but the proposed fines mark a significant escalation in the regulation of AI-driven political tactics. Kramer also faces 13 felony charges for allegedly violating New Hampshire's law against using misleading information to deter people from voting, as well as 13 misdemeanor charges for falsely representing himself as a candidate. The charges, filed in four counties, are being prosecuted by the state attorney general's office, underscoring how seriously authorities are treating the case.

Kramer's involvement in the scandal has also drawn attention to his broader career as a political operative. A self-described get-out-the-vote specialist, he has worked on high-profile campaigns, including that of former Democratic presidential hopeful Rep. Dean Phillips of Minnesota and rapper Kanye West's unsuccessful 2020 presidential bid. Phillips has since distanced himself from Kramer, who says he acted alone in orchestrating the robocalls. Meanwhile, Paul Carpenter, the New Orleans-based magician who created the deepfake audio, has come forward to explain his role. Carpenter said Kramer paid him $150 to produce the audio but denied any malicious intent, insisting he did not know how the recording would be used. "I created the audio used in the robocall. I did not distribute it," he told NBC. "I was in a situation where someone offered me some money to do something and I did it."

The investigation into the robocalls has also implicated two Texas-based companies: Life Corp., identified as the source of the calls, and Lingo Telecom, which transmitted them. Lingo Telecom has strongly denied any wrongdoing, calling the FCC's actions an attempt to impose new rules retroactively. "Lingo Telecom takes its regulatory obligations extremely seriously and has fully cooperated with federal and state agencies to assist with identifying the parties responsible for originating the New Hampshire robocall campaign," the company stated. "Lingo Telecom was not involved whatsoever in the production of these calls and the actions it took complied with all applicable federal regulations and industry standards."

The case has broader implications for the use of AI in politics and the need for stronger safeguards against deepfakes. As AI grows more sophisticated, so does its potential for misuse in manipulating public opinion and undermining democratic processes. Kramer's actions, which prosecutors allege were illegal, have raised important questions about the ethical boundaries of political campaigning and the need for greater transparency and accountability in the use of emerging technologies. The outcome of the trial could serve as a benchmark for how governments and regulators address the challenges AI poses. In the meantime, the incident is a stark reminder of the vulnerabilities in the electoral system and the importance of protecting the integrity of democratic processes in an age of rapid technological change.